Edge AI Hardware: Revolutionizing Real-Time Decision Making

Published June 15th, 2025 · AI Education | Edge AI & Hardware

Imagine a smartphone that doesn't just listen to you but understands you in real time, even without an internet connection. That's the promise of edge AI hardware. As devices get smarter, they rely less on the cloud and process data right where it's generated. Why does this matter now? Because for interactive and safety-critical applications, latency is the enemy. Let's explore how edge AI is cutting the cord and bringing intelligence closer to home.

What is Edge AI Hardware?

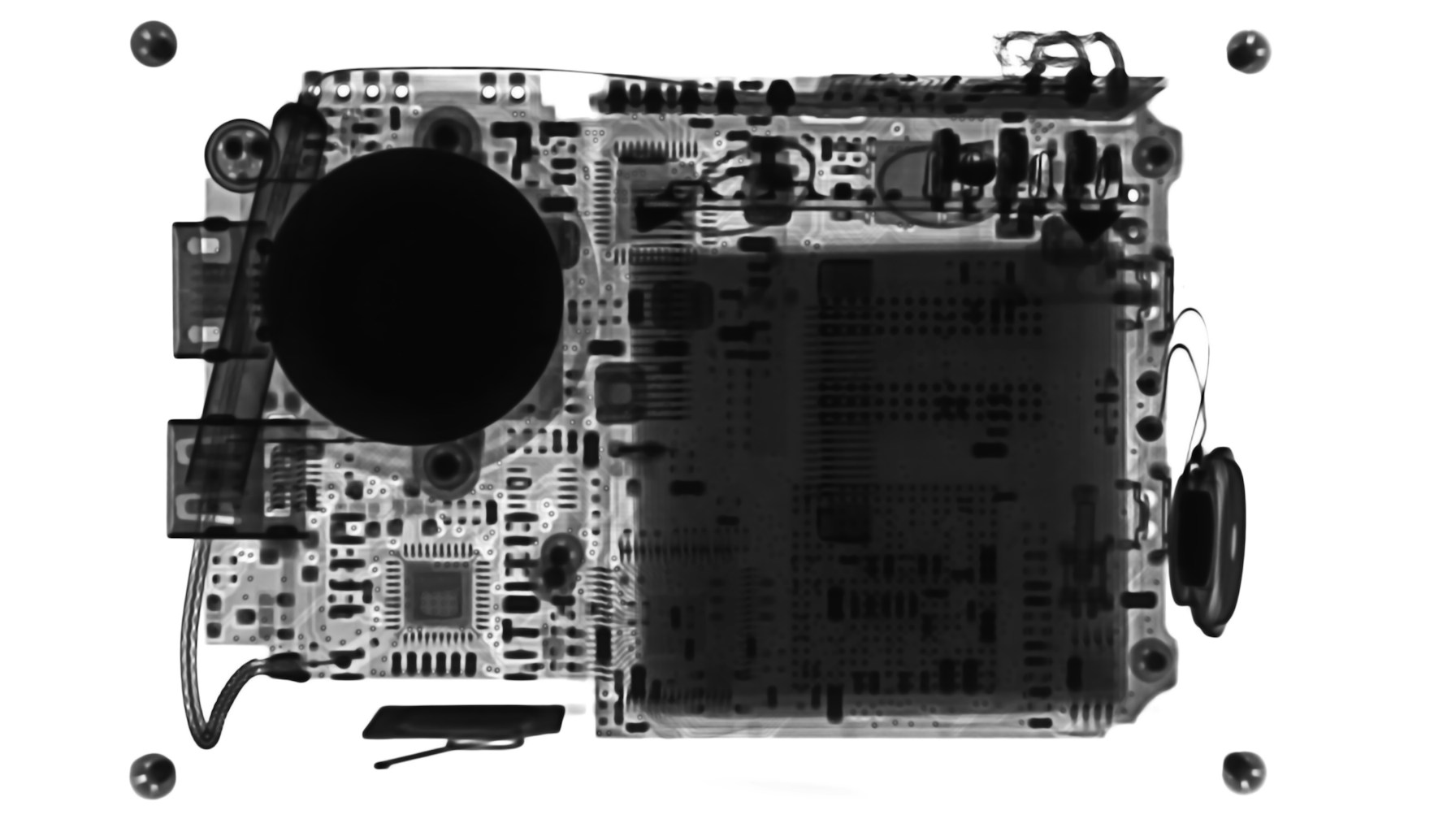

Edge AI hardware refers to devices that run AI models locally, on the device itself, rather than sending data to a centralized cloud server. This approach has gained traction as demand for real-time processing and privacy grows, and recent advances in chip technology have made these devices more powerful and efficient.

How It Works

Think of edge AI hardware as a mini-brain in your device. It processes data on the spot, reducing the need for constant internet access. For example, a smart camera can recognize faces without sending images to the cloud, much like you can recognize a friend in a crowd without asking anyone else for help. The payoff is speed and privacy.
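To make the smart-camera example concrete, here is a minimal sketch of on-device matching. It assumes the camera has already turned a face into a numeric embedding (a common approach, but an assumption here, not something the article specifies). The names `recognize_locally` and `KNOWN_FACES`, the toy 3-dimensional vectors, and the 0.9 threshold are all hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def recognize_locally(embedding, known_faces, threshold=0.9):
    """Return the best-matching enrolled name, or None if nothing is close enough.

    Everything runs on the device: no image or embedding is sent anywhere.
    """
    best_name, best_score = None, threshold
    for name, reference in known_faces.items():
        score = cosine_similarity(embedding, reference)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name


# Hypothetical enrolled embeddings stored on the device (toy values).
KNOWN_FACES = {
    "alice": [0.9, 0.1, 0.2],
    "bob": [0.1, 0.8, 0.5],
}
```

Calling `recognize_locally([0.88, 0.12, 0.21], KNOWN_FACES)` matches the enrolled "alice" vector, while an embedding unlike either entry returns `None`. A real device would use a trained model to produce much larger embeddings, but the lookup itself needs no network access.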

Real-World Applications

In healthcare, wearable devices monitor vital signs and alert users in real time. Autonomous vehicles use edge AI for instant decision-making on the road. Retailers employ smart shelves that track inventory and customer preferences without delay. Each application benefits from reduced latency and enhanced privacy.
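As an illustration of the wearable case, the sketch below keeps a short rolling window of heart-rate readings on the device and raises an alert when the average leaves a set range. The class name `HeartRateMonitor`, the window size, and the thresholds are hypothetical values chosen for the example, not medical guidance and not any vendor's actual method.

```python
from collections import deque


class HeartRateMonitor:
    """Rolling-average heart-rate check that runs entirely on the device."""

    def __init__(self, window=5, low=40.0, high=120.0):
        # deque(maxlen=...) discards the oldest reading automatically.
        self.samples = deque(maxlen=window)
        self.low = low
        self.high = high

    def add_sample(self, bpm):
        """Record a reading; return an alert string, or None if nothing to report."""
        self.samples.append(bpm)
        if len(self.samples) < self.samples.maxlen:
            return None  # wait until the window is full
        average = sum(self.samples) / len(self.samples)
        if average > self.high:
            return "high heart rate"
        if average < self.low:
            return "low heart rate"
        return None
```

Averaging over a window rather than reacting to a single reading is a simple way to avoid alerting on one noisy sample, and because nothing leaves the device the alert does not wait on a network round trip.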

Benefits & Limitations

Edge AI offers speed and privacy: processing data locally cuts latency and limits data exposure. However, it can be costly to implement and maintain, since every device needs capable hardware and has to be kept up to date. It's not ideal for tasks that require vast computational power or extensive data sets, where cloud AI still reigns supreme. Balance is key: use edge AI where an immediate response is crucial.
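The trade-off can be framed as a simple latency budget. The sketch below is one hypothetical way to decide where a task should run: it prefers the device whenever local inference meets the deadline, and falls back to the cloud only if the network is available and the round trip still fits. The function name `choose_target` and all the millisecond figures are assumptions for illustration, not measurements.

```python
def choose_target(deadline_ms, edge_infer_ms, cloud_infer_ms,
                  round_trip_ms, network_available=True):
    """Pick where to run a task given a response deadline in milliseconds.

    Edge latency is just local inference time; cloud latency adds the
    network round trip on top of (usually faster) server inference.
    """
    edge_total = edge_infer_ms
    cloud_total = cloud_infer_ms + round_trip_ms

    if edge_total <= deadline_ms:
        return "edge"  # fast enough locally, and data stays on the device
    if network_available and cloud_total <= deadline_ms:
        return "cloud"  # too heavy for the device, but the deadline allows it
    return "neither"  # the deadline cannot be met with these numbers


# A tight 50 ms deadline favors the device even though the server is faster.
print(choose_target(deadline_ms=50, edge_infer_ms=30,
                    cloud_infer_ms=5, round_trip_ms=80))
# A heavy model with a relaxed deadline goes to the cloud.
print(choose_target(deadline_ms=500, edge_infer_ms=900,
                    cloud_infer_ms=40, round_trip_ms=80))
```

With these made-up numbers the first call prints `edge` and the second prints `cloud`, which mirrors the rule of thumb above: immediate responses belong on the device, heavy computation in the cloud.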

Latest Research & Trends

Recent research highlights advances in low-power AI chips that make edge devices more efficient. Companies such as NVIDIA and Qualcomm are pushing the envelope with new releases. These advances point toward a future in which edge AI becomes the norm for many applications and devices operate more autonomously.

Visual

mermaid
flowchart TD
    A[Data Collection] --> B[Local Processing]
    B --> C[Immediate Action]
    C --> D[Feedback Loop]
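The four stages in the flowchart can be sketched as a small control loop. This is one hypothetical reading of the diagram, using a temperature-controlled fan as a stand-in device: the function `run_pipeline`, the simulated readings, and the two thresholds are assumptions. The "feedback" here is the fan state itself, which carries into the next decision (a technique known as hysteresis).

```python
def run_pipeline(readings, on_above=30.0, off_below=28.0):
    """Run collect -> process -> act -> feedback once per reading.

    Returns the list of actions taken, one per reading.
    """
    fan_on = False
    actions = []
    for temperature in readings:  # 1. data collection (simulated sensor values)
        # 2. local processing: the decision depends on the current fan state
        if fan_on:
            fan_on = temperature >= off_below
        else:
            fan_on = temperature > on_above
        # 3. immediate action: no network call sits between decision and action
        actions.append("fan_on" if fan_on else "fan_off")
        # 4. feedback loop: fan_on is carried into the next iteration
    return actions


print(run_pipeline([27.0, 31.0, 29.0, 27.5, 29.0]))
# prints ['fan_off', 'fan_on', 'fan_on', 'fan_off', 'fan_off']
```

Note how the reading of 29.0 keeps the fan on the first time (it was already running) but leaves it off the second time. That difference is the feedback stage at work.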

Glossary

- Edge AI: AI processing done locally on a device rather than in the cloud.

- Latency: The delay between an input or request and the corresponding response; for edge AI, the time from capturing data to acting on it.

- AI Chip: A specialized processor designed to accelerate AI computations.

- Cloud AI: AI processing done on remote servers accessed via the internet.

- Wearable Devices: Electronics worn on the body that often include sensors for data collection.

- Autonomous Vehicles: Vehicles capable of sensing their environment and operating without human involvement.

- Smart Shelves: Retail technology that uses sensors to track inventory and customer interactions.

